Memory of Sound

by Jia Hu

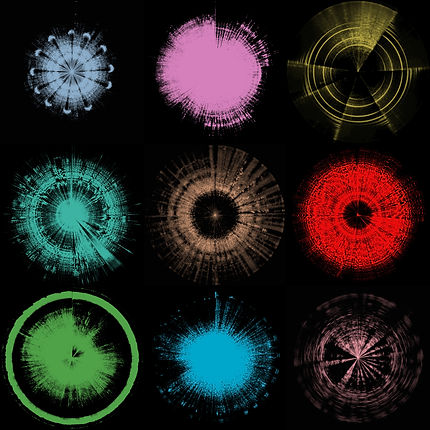

This project explores the relationship between sound, memory, and identity through an interactive visualization created in TouchDesigner. Using real-time audio input, the system maps sound data onto a three-dimensional coordinate system, generating unique visual traces along the x, y, and z axes. These traces form "sound wheels," reminiscent of tree growth rings, where each pattern is a direct imprint of the sound that produced it.
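The write-up does not say exactly how the TouchDesigner network turns audio into x, y, z coordinates. The Python sketch below shows one possible mapping. Its assumptions are not taken from the project itself:

- Audio arrives in short blocks of samples.
- Each block's RMS loudness pushes the trace outward from a base ring.
- The angle sweeps once around the circle every `frames_per_ring` blocks.
- Each completed sweep starts a new, larger ring, much like a tree's growth ring.

The function and parameter names are hypothetical, and this is not the project's actual TouchDesigner setup.

```python
import math
from typing import List, Sequence, Tuple


def rms(frame: Sequence[float]) -> float:
    """Root-mean-square loudness of one block of audio samples (0.0 for an empty block)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def sound_wheel(frames: Sequence[Sequence[float]], frames_per_ring: int = 64,
                ring_spacing: float = 1.0,
                amp_scale: float = 0.5) -> List[Tuple[float, float, float]]:
    """Turn successive audio blocks into (x, y, z) points that wind outward in rings."""
    points = []
    for i, frame in enumerate(frames):
        ring = i // frames_per_ring                                     # which "growth ring"
        theta = 2 * math.pi * (i % frames_per_ring) / frames_per_ring   # position on that ring
        loud = rms(frame)
        r = ring_spacing * (ring + 1) + amp_scale * loud                # louder -> bulges outward
        points.append((r * math.cos(theta), r * math.sin(theta), loud))  # z records loudness
    return points
```

Under this mapping, silent input traces perfect concentric circles. Louder passages bulge outward, so each recording leaves its own irregular ring.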

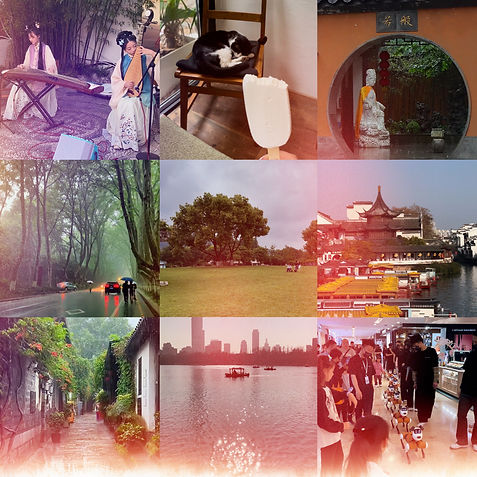

Drawing from my own experiences, I incorporate the distinct sounds of my hometown, Nanjing, China—early morning cuckoo calls, the rhythmic patter of summer rain, the hum of cicadas, melodies from elders practicing traditional instruments in public parks, the purring of stray neighborhood cats, the resonant chimes of Jiming Temple’s bells, and the familiar cadence of subway announcements. These auditory landscapes, once ephemeral, are now made tangible through visual translation.

The Story

Sound plays a fundamental role in shaping memory and identity. Certain auditory experiences become deeply embedded in our consciousness, encoding emotions and imagery from specific moments in time. When we encounter these sounds again, they act as triggers, recalling stored memories and reconnecting us to past experiences. Sound constructs a sense of belonging, nostalgia, and cultural identity, particularly in the context of personal and collective memory.

Through computational processes, past recordings are transformed into patterns, while real-time interaction allows new sounds, such as spoken words, singing, or ambient noise, to be visualized immediately. In doing so, I hope this project not only preserves fleeting sounds but also reinterprets them, offering a space to reflect on the role of sound in shaping personal and cultural narratives.

Design Process

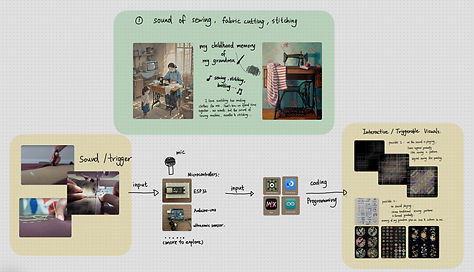

Brainstorming & Prototyping

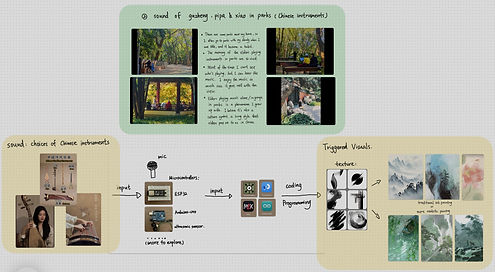

The project began with an initial brainstorming phase. I sketched out design concepts and visual prototypes to explore possible directions.

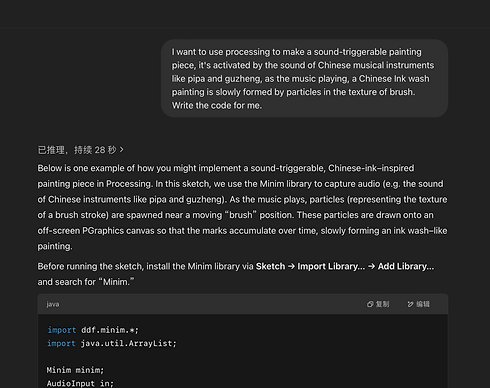

Exploring Technologies & Developing Sound-Interactive Prototypes

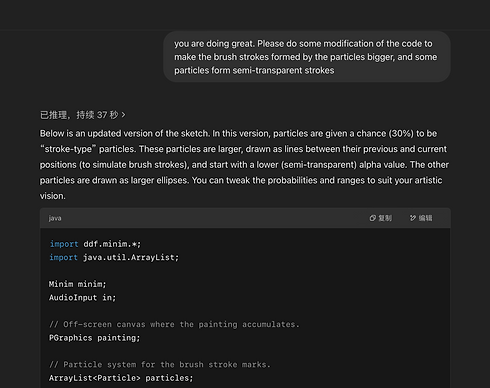

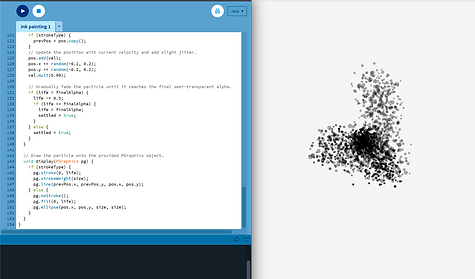

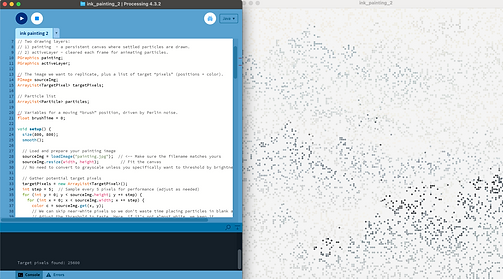

In this phase, I experimented with Processing, writing code to test how particles could form specific images in response to music. Since I have limited coding experience, I used ChatGPT to help generate code based on my desired visual effects.

Initially, the particles did not form the intended visual patterns. This led to an iterative process of refining prompts and adjusting the code through multiple interactions with ChatGPT. After several rounds of modifications, I successfully achieved the desired visual effect.

Next, I continued using Processing to develop an interactive animation that responds to sound: a small green circle that reacts to breathing. As the user exhales, the circle expands and gradually becomes transparent; as they inhale, it shrinks back to its original size. This mini-project served as an initial exploration of how sound can trigger organic, life-like visual interactions. Through further experimentation, I discovered that the circle also responded to variations in volume and pitch; this became the foundation for my final artwork.
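The breathing behaviour described above comes down to a smoothed loudness value driving both the circle's size and its opacity. The following is a logic sketch in Python, not the original Processing code. It assumes some audio library supplies a loudness reading, already normalised to between 0 and 1, on every frame. The `BreathCircle` class, its parameters, and the sample readings are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class BreathCircle:
    """Hypothetical model of the breathing circle: louder input -> bigger, more transparent."""
    base_radius: float = 20.0   # size at rest (silence / inhaling)
    max_growth: float = 80.0    # extra radius at full loudness
    smoothing: float = 0.2      # fraction of the gap to the new reading closed each frame
    level: float = 0.0          # smoothed loudness between 0 and 1

    def update(self, loudness: float) -> Tuple[float, int]:
        """Feed one loudness reading; return (radius, alpha) for drawing this frame."""
        loudness = max(0.0, min(1.0, loudness))                 # clamp out-of-range readings
        self.level += self.smoothing * (loudness - self.level)  # exponential smoothing
        radius = self.base_radius + self.max_growth * self.level
        alpha = int(round(255 * (1.0 - self.level)))            # 255 = opaque, 0 = invisible
        return radius, alpha


if __name__ == "__main__":
    circle = BreathCircle()
    for reading in [0.0, 0.6, 0.9, 0.9, 0.3, 0.0, 0.0]:  # made-up exhale, then inhale
        print(circle.update(reading))
```

Smoothing keeps the circle from jittering with every small fluctuation. Exhaling makes it swell and fade gradually, and quiet input lets it ease back to `base_radius`.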

Breathing Test

Singing Test

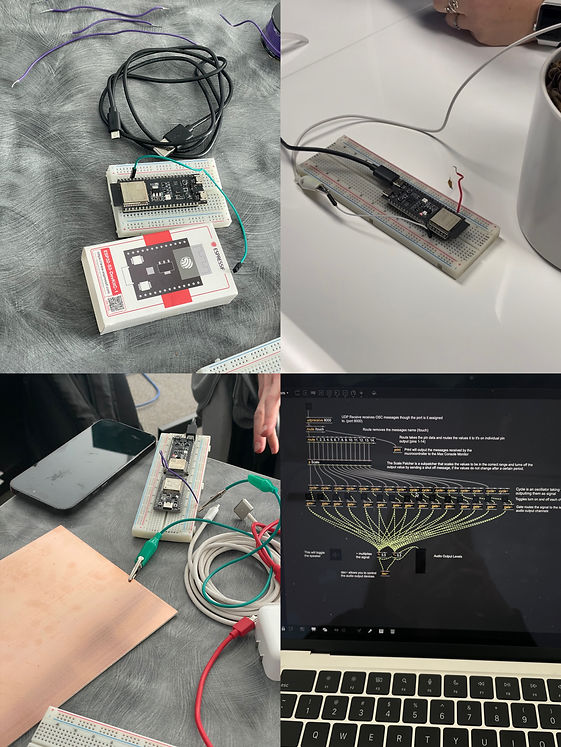

Experimenting with Arduino IDE & MAX MSP

I explored the possibility of using conductive materials and microcontrollers to trigger sound generation. By connecting conductive materials to microcontrollers, I attempted to send touch interaction data into Arduino IDE and MAX MSP to produce different sounds and musical outputs. However, I was unable to achieve precise control over the generated sounds to match the intended auditory experience, leading me to abandon this approach.

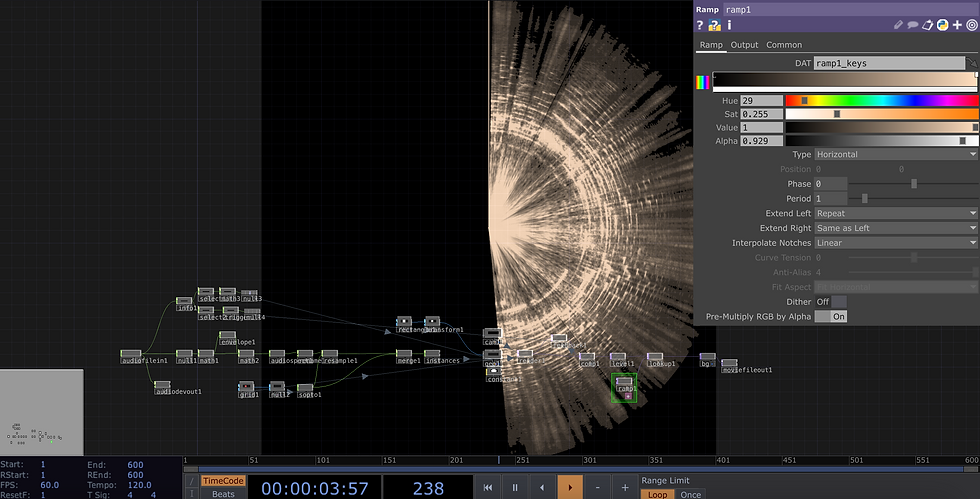

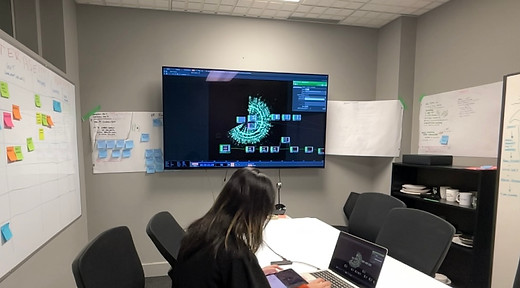

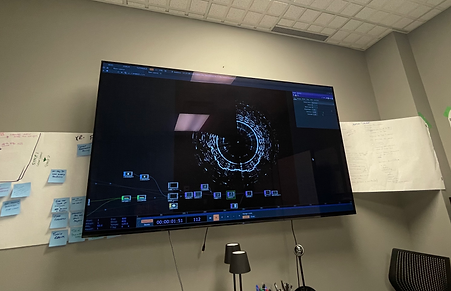

Final Development: Transitioning to TouchDesigner

After evaluating various options, I shifted my focus to TouchDesigner, teaching myself through online tutorials. Building upon the prototype developed in Processing, I leveraged TouchDesigner’s visual programming capabilities to create more dynamic and diverse visual effects without the need for extensive coding.

Through multiple rounds of exploration and refinement, I successfully visualized real-time sound data as a generative sound wheel, achieving the intended interactive art experience.

Here's the TouchDesigner workflow:

Future Steps

Moving forward, this project holds great potential for expanding the visual possibilities of sound-generated patterns.

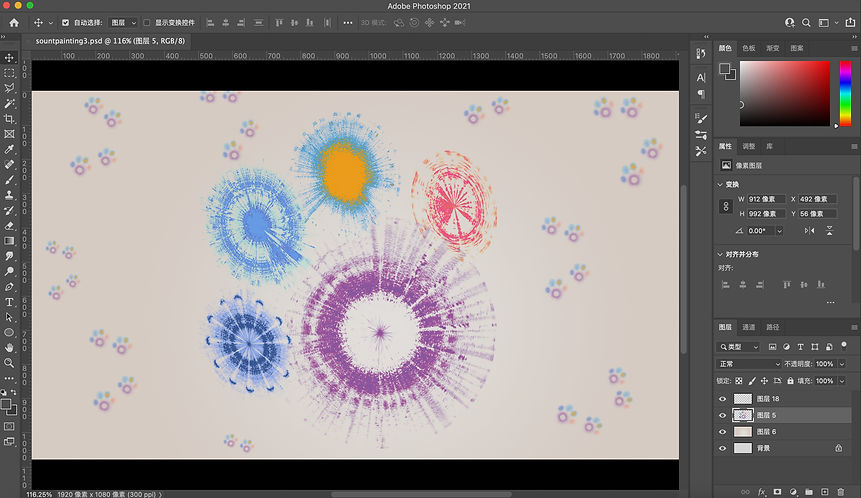

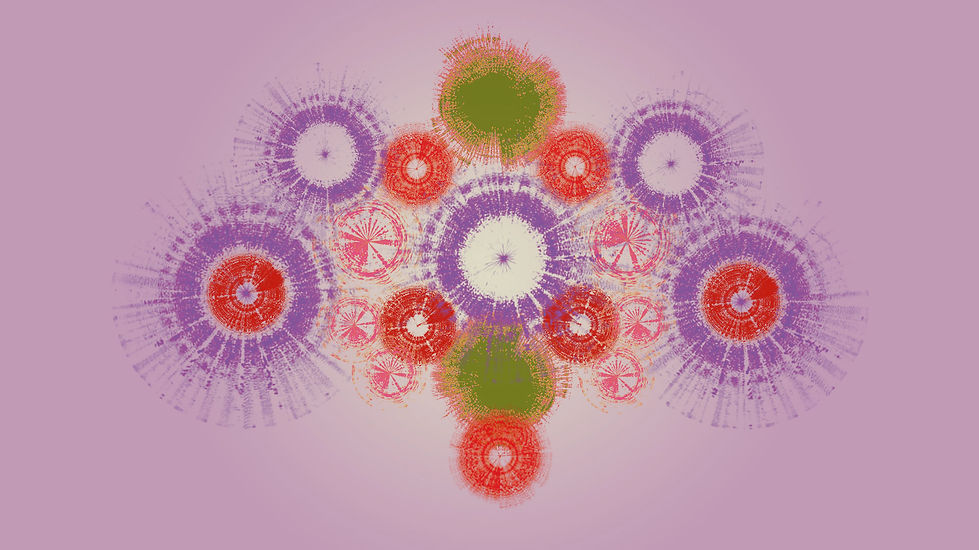

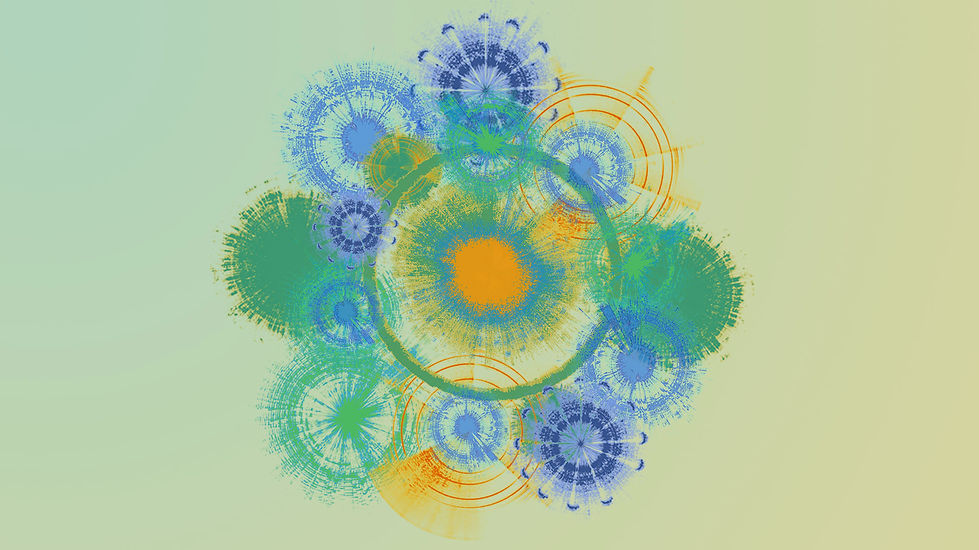

So far, I have experimented with altering the colours of sound wheels in Photoshop and creating collages of multiple sound patterns. These early prototypes demonstrate how variations in sound input can result in unique and aesthetically compelling imagery. In the next phase, I plan to integrate real-time colour modulation, layering effects, and interactive elements in TouchDesigner to push the boundaries of sound-responsive visuals.
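One possible approach to real-time colour modulation is to tie hue to pitch and brightness to loudness. The Python sketch below assumes the audio analysis already provides a pitch estimate in hertz and a loudness value between 0 and 1. The 80 to 2000 Hz range, the hue span, and the function name are illustrative choices, not settings from the project. Pitch is mapped on a logarithmic scale because that is roughly how pitch is perceived.

```python
import colorsys
import math
from typing import Tuple


def pitch_to_rgb(freq_hz: float, loudness: float,
                 low_hz: float = 80.0, high_hz: float = 2000.0) -> Tuple[int, int, int]:
    """Map pitch to hue and loudness to brightness; returns 8-bit (r, g, b)."""
    f = min(max(freq_hz, low_hz), high_hz)                  # clamp to the chosen range
    t = math.log(f / low_hz) / math.log(high_hz / low_hz)   # 0 at low_hz, 1 at high_hz (log scale)
    hue = 0.75 * t                                          # red for low sounds, violet for high
    value = min(max(loudness, 0.0), 1.0)                    # silence -> black
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, value)
    return round(r * 255), round(g * 255), round(b * 255)
```

The mapping is a pure function of two numbers per frame. It could therefore run once per frame inside a real-time pipeline, or be applied afterwards to recolour recorded sound wheels.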

TouchDesigner offers an exciting avenue for this development, as its real-time generative capabilities allow for more complex visual outputs without the constraints of traditional coding. Additionally, I am interested in incorporating machine learning models to analyze and categorize different sound patterns, enabling a more refined and intentional aesthetic transformation. This could open possibilities for personalized sound visualizations based on cultural, emotional, or environmental soundscapes, making the project an even more immersive and meaningful artistic exploration.

ACKNOWLEDGEMENTS

Supervisor

Dr. Patrick Parra Pennefather

Special Thanks to:

Davis Heslep, Nancy Lee, Loretta Todd